Hey there, fellow Software Architect! Ever tried explaining complex concepts to someone who doesn’t speak your language? It’s painful, right? Computers feel the same way when they try to understand our nuanced human lingo. But there’s good news! Generative AI (GenAI) has a trick called embeddings to bridge this communication gap. Today, we’ll explore what embeddings are and how you can implement this pattern using Microsoft technologies and C#. Ready for the adventure? Buckle up and let’s dive in!

So, What’s an Embedding Anyway?

Alright, let’s break this down in human-speak.

Imagine you’ve got a mountain of text—words, sentences, even entire documents. Now, what if I told you you could take each of those and compress them into a neat little list of numbers that actually means something to a computer? Yep, not gibberish—real, meaningful numbers. That magic trick? It’s called an embedding.

In the land of Large Language Models (LLMs)—think GPT, BERT, and their AI cousins—embeddings are like fingerprints for language. They’re numerical vectors that capture the semantic meaning of text. Not just the spelling, not just the words—but the context, the intent, the vibe.

Analogy Time!

Think of embeddings like dropping words onto a huge digital map. Not a road map, but a semantic map—a landscape where the distance between words tells us how closely they’re related in meaning.

Let’s say “king” lives in a fancy downtown condo. His neighbor? “Queen.” They’re royalty, after all, so it makes sense they’re close together. But if you search for “banana”—boom! You’re in the suburbs, way out by the farmer’s market. Totally different neighborhood.

In this metaphorical city:

- “Doctor” and “Nurse” might live on the same street.

- “Beach” and “Sunshine” probably share a beach house.

- “Server” (as in waiter) and “Server” (as in web server)? They might sound the same, but they live in different ZIP codes depending on context.

That’s the beauty of embeddings—they translate language into a high-dimensional vector space where related concepts are close together, and unrelated ones drift apart. And the best part? AI can navigate that space.

But How Does It Work?

Embeddings are generated using neural networks trained on massive corpora of text. The model learns patterns, like which words appear together or how sentence structures vary, then distills that knowledge into vectors. These vectors might be 300-dimensional (classic word vectors such as word2vec), 768-dimensional (BERT-base), or 1536-dimensional (OpenAI’s text-embedding-ada-002), depending on the model. You don’t need to worry about the math under the hood. Just know that no single number means much by itself; the meaning lives in where the whole vector sits relative to other vectors.

For example:

- “The cat sat on the mat.” → [0.14, 0.23, …, 0.87]

- “A feline rested on the rug.” → [0.15, 0.22, …, 0.85]

The numbers above are illustrative, not real model output, but the idea holds: the embeddings for those two sentences land close together because, semantically, they’re saying nearly the same thing. Meanwhile, “The rocket launched into space” would live far away in the vector universe.

Why Should You Care?

Because this is how your GenAI apps understand meaning. Not just words, but what those words are trying to say. It’s how we move from clunky keyword search to intelligent semantic search. It’s how chatbots answer with intent. It’s how recommendation engines say, “Hey, if you liked that, you might love this.”

So next time someone says “embeddings,” just think: digital GPS coordinates for meaning. And you? You’re the one building the map.


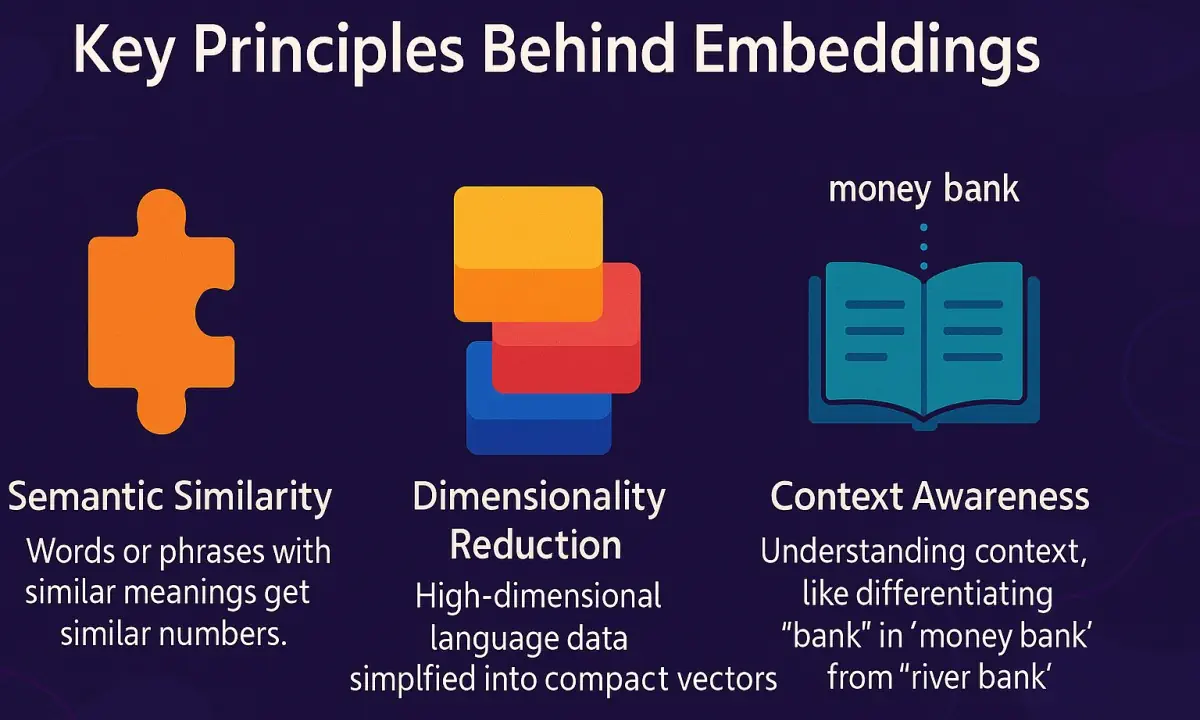

Key Principles Behind Embeddings

Here’s the core idea of why embeddings work so brilliantly:

- Semantic Similarity: Words or phrases with similar meanings get similar numbers.

- Dimensionality Reduction: High-dimensional language data simplified into compact vectors.

- Context Awareness: Understanding context, like differentiating “bank” in “money bank” from “river bank.”


When Should You Use Embeddings?

Thinking, “Cool theory, but how do I use it practically?” Here’s your answer:

- Semantic Search: Go beyond keywords. Like searching “cute dog pics” and getting adorable puppies even without exact tags.

- Recommendations: Suggest similar items based on meaning, not just text matches.

- Clustering & Classification: Automatically group or classify texts meaningfully.

- Question Answering: Fetch documents based on relevance, not keyword match alone.

- Anomaly Detection: Identify odd texts in large datasets by their unusual semantic positions.

If your GenAI project demands understanding, not just plain text matching, embeddings are your superhero!
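
To make the "Clustering & Classification" idea concrete, here is a minimal sketch, assuming you already have embedding vectors for a few labeled examples. It averages each label's vectors into a centroid and assigns new text to the label with the most similar centroid. `NearestCentroidClassifier` and its method names are made up for this illustration, and the tiny 2-dimensional vectors you would feed it in a quick test stand in for real model output.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper (not part of any library): nearest-centroid classification.
public static class NearestCentroidClassifier
{
    // Averages the example vectors of one label into a single centroid.
    public static float[] Centroid(IReadOnlyList<float[]> vectors)
    {
        if (vectors.Count == 0)
            throw new ArgumentException("Need at least one vector.", nameof(vectors));

        var centroid = new float[vectors[0].Length];
        foreach (var v in vectors)
        {
            if (v.Length != centroid.Length)
                throw new ArgumentException("Vectors must share one dimension.", nameof(vectors));
            for (int i = 0; i < v.Length; i++)
                centroid[i] += v[i] / vectors.Count;
        }
        return centroid;
    }

    // Cosine similarity: dot(a, b) / (|a| * |b|); returns 0 for a zero vector.
    public static double CosineSimilarity(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must share one dimension.");

        double dot = 0, magA = 0, magB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += (double)a[i] * b[i];
            magA += (double)a[i] * a[i];
            magB += (double)b[i] * b[i];
        }
        if (magA == 0 || magB == 0) return 0;
        return dot / (Math.Sqrt(magA) * Math.Sqrt(magB));
    }

    // Returns the label whose centroid is most similar to the query vector.
    public static string Classify(float[] query, IReadOnlyDictionary<string, float[]> centroids)
    {
        return centroids
            .OrderByDescending(kv => CosineSimilarity(query, kv.Value))
            .First()
            .Key;
    }
}
```

In a real app you would build one centroid per category from embeddings of known examples, then call `Classify` with the embedding of each incoming text. Nearest-centroid is only one simple option; it is shown here because it needs nothing beyond the vectors themselves.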

Breaking Down the Embeddings Pattern Components

To leverage embeddings, you’ll need these essentials:

- Embedding Model: Your AI’s brain, converting text into numerical vectors.

- Embedding Vector: The numeric representation itself.

- Vector Database: Efficiently stores embeddings for quick search and comparison.

- Similarity Metric: Measures semantic closeness (e.g., cosine similarity).
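
Here is a rough sketch of how the last three pieces fit together, assuming the embedding model has already turned your texts into vectors. `InMemoryVectorStore` is a hypothetical stand-in for a real vector database: it keeps vectors in a list and compares the query against every one of them with cosine similarity. That brute-force scan is fine for a demo or a few thousand items; real vector databases use indexes to avoid it.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a vector database: brute-force search over a list.
public class InMemoryVectorStore
{
    private readonly List<(string Id, float[] Vector)> _items =
        new List<(string Id, float[] Vector)>();

    // Stores an embedding vector under an id (for example, a document key).
    public void Add(string id, float[] vector) => _items.Add((id, vector));

    // Returns up to k stored ids ranked by similarity to the query, best first.
    public IReadOnlyList<(string Id, double Score)> Search(float[] query, int k)
    {
        return _items
            .Select(item => (item.Id, Score: CosineSimilarity(query, item.Vector)))
            .OrderByDescending(result => result.Score)
            .Take(k)
            .ToList();
    }

    // The similarity metric: dot(a, b) / (|a| * |b|); returns 0 for a zero vector.
    private static double CosineSimilarity(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must share one dimension.");

        double dot = 0, magA = 0, magB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += (double)a[i] * b[i];
            magA += (double)a[i] * a[i];
            magB += (double)b[i] * b[i];
        }
        if (magA == 0 || magB == 0) return 0;
        return dot / (Math.Sqrt(magA) * Math.Sqrt(magB));
    }
}
```

Typical usage would be to call `Add` once per document at indexing time, then call `Search(queryVector, 5)` with the embedding of the user's query to get the five closest documents.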

Time for Code! Embeddings Implementation in C#

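Let’s see embeddings in action with a practical C# example. To keep the demo runnable without an Azure subscription, it hides the model behind an IEmbeddingService interface and plugs in a mock that returns hand-picked vectors. A real Azure OpenAI implementation of the same interface follows in the next section.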

C# Embeddings Example:

    using System;
    using System.Threading.Tasks;

    // Interface for embedding service
    public interface IEmbeddingService
    {
        Task<float[]> GenerateEmbeddingAsync(string text);
    }

    // Simple usage demo
    public class EmbeddingsDemo
    {
        private readonly IEmbeddingService _embeddingService;

        public EmbeddingsDemo(IEmbeddingService embeddingService)
        {
            _embeddingService = embeddingService;
        }

        public async Task RunDemoAsync()
        {
            string sentence1 = "The dog chased the ball.";
            string sentence2 = "A puppy played fetch.";
            string sentence3 = "Stars shine brightly at night.";

            var embed1 = await _embeddingService.GenerateEmbeddingAsync(sentence1);
            var embed2 = await _embeddingService.GenerateEmbeddingAsync(sentence2);
            var embed3 = await _embeddingService.GenerateEmbeddingAsync(sentence3);

            Console.WriteLine($"Similarity dog-ball vs puppy-fetch: {CosineSimilarity(embed1, embed2):F4}");
            Console.WriteLine($"Similarity dog-ball vs stars-night: {CosineSimilarity(embed1, embed3):F4}");
        }

        // Calculate cosine similarity: dot(a, b) / (|a| * |b|)
        private static double CosineSimilarity(float[] vecA, float[] vecB)
        {
            if (vecA.Length != vecB.Length)
                throw new ArgumentException("Vectors must have the same number of dimensions.");

            double dotProduct = 0, magA = 0, magB = 0;
            for (int i = 0; i < vecA.Length; i++)
            {
                dotProduct += (double)vecA[i] * vecB[i];
                magA += (double)vecA[i] * vecA[i];
                magB += (double)vecB[i] * vecB[i];
            }

            // A zero vector has no direction, so treat it as unrelated.
            if (magA == 0 || magB == 0) return 0;

            return dotProduct / (Math.Sqrt(magA) * Math.Sqrt(magB));
        }
    }

    // Mock embedding service for demo purposes.
    // The vectors are hand-picked toy values, not real model output. The fallback
    // vector points in a clearly different direction from the "dog" and "puppy"
    // vectors, so the unrelated sentence scores lower on cosine similarity.
    public class MockEmbeddingService : IEmbeddingService
    {
        public Task<float[]> GenerateEmbeddingAsync(string text)
        {
            float[] mockData = text.Contains("dog") ? new float[] {0.1f, 0.2f} :
                               text.Contains("puppy") ? new float[] {0.11f, 0.19f} :
                               new float[] {0.9f, 0.1f};
            return Task.FromResult(mockData);
        }
    }

    public class Program
    {
        static async Task Main(string[] args)
        {
            var embeddingService = new MockEmbeddingService();
            var demo = new EmbeddingsDemo(embeddingService);
            await demo.RunDemoAsync();
        }
    }

Different Ways to Implement Embeddings (with C# Examples)

There’s more than one way to implement embeddings, especially if you’re using Microsoft technologies. Here’s a quick overview:

1️⃣ Using Azure OpenAI Service

Azure OpenAI provides powerful, pre-trained models ready to roll. Perfect if you prefer plug-and-play functionality!

    using System;
    using System.Linq;
    using System.Threading.Tasks;
    using Azure;
    using Azure.AI.OpenAI;

    // Note: these calls follow the early 1.0.0-beta releases of the Azure.AI.OpenAI
    // package. Later releases changed the embeddings API, so check the version you install.
    public class AzureEmbeddingService : IEmbeddingService
    {
        private readonly OpenAIClient _client;
        private readonly string _deploymentName;

        public AzureEmbeddingService(string endpoint, string apiKey, string deploymentName)
        {
            _client = new OpenAIClient(new Uri(endpoint), new AzureKeyCredential(apiKey));
            _deploymentName = deploymentName;
        }

        public async Task<float[]> GenerateEmbeddingAsync(string text)
        {
            var response = await _client.GetEmbeddingsAsync(_deploymentName, new EmbeddingsOptions(text));

            // One input string produces one embedding, found at index 0.
            return response.Value.Data[0].Embedding.ToArray();
        }
    }

2️⃣ Local Embeddings with ML.NET

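If cloud isn’t your style, ML.NET can produce text vectors locally, though with real limits. The FeaturizeText transform used here builds vectors from n-gram counts rather than from a learned language model, so it captures word overlap more than meaning. Treat it as a lightweight stand-in, not a drop-in replacement for a neural embedding model.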

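    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using Microsoft.ML;
    using Microsoft.ML.Transforms.Text;

    public class LocalEmbeddingService : IEmbeddingService
    {
        private readonly PredictionEngine<TextData, EmbeddingData> _predictor;

        // FeaturizeText learns its n-gram vocabulary from the data it is fitted on,
        // so pass in representative sample texts. Fitting on an empty list would
        // leave it with no vocabulary to build features from.
        public LocalEmbeddingService(IEnumerable<string> sampleTexts)
        {
            var context = new MLContext();
            var pipeline = context.Transforms.Text.FeaturizeText("Embedding", nameof(TextData.Text));
            var trainingData = context.Data.LoadFromEnumerable(
                sampleTexts.Select(t => new TextData { Text = t }));
            var model = pipeline.Fit(trainingData);
            _predictor = context.Model.CreatePredictionEngine<TextData, EmbeddingData>(model);
        }

        public Task<float[]> GenerateEmbeddingAsync(string text)
        {
            // PredictionEngine is not thread-safe; guard it if you call this concurrently.
            var prediction = _predictor.Predict(new TextData { Text = text });
            return Task.FromResult(prediction.Embedding);
        }
    }

    public class TextData { public string Text { get; set; } }

    public class EmbeddingData { public float[] Embedding { get; set; } }

These examples show two different paths, cloud or local, and both plug in behind the same IEmbeddingService interface.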

Practical Use Cases: Where Embeddings Shine!

Wondering how embeddings actually impact the real world? Check this out:

- Semantic Search: Ditch keyword-based search. Give your users results based on meaning, not exact word matches.

- Intelligent Chatbots: Help bots grasp real user intent, providing smarter responses.

- Personalized Recommendations: Use semantic similarity to suggest products, articles, or videos that resonate with user preferences.

- Customer Sentiment Analysis: Group feedback by feelings, not just by specific words.

- Fraud & Anomaly Detection: Spot unusual text or behaviors that don’t fit typical patterns.

Embeddings aren’t a solution hunting for a problem—they’re your secret weapon for genuinely intelligent software.

Anti-Patterns: Don’t Make These Embeddings Mistakes!

Embeddings are awesome, but beware of these common pitfalls:

- Ignoring Context Completely: Embeddings aren’t magic; context still matters. Always factor it in.

- Wrong Model Selection: Picking a model poorly matched to your data or domain can hurt performance.

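- Not Normalizing Data: Cosine similarity divides by vector length for you, but a raw dot product or Euclidean distance does not. If you rank with those, scale every vector to unit length first, or long vectors will skew the results.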

- Relying Too Much on Defaults: Models often have tweakable parameters—use them!

- Poor Data Quality: Remember, poor-quality data means poor-quality embeddings. Keep your data clean.

Avoid these traps, and embeddings will treat you right!
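
On the normalization point, here is a small sketch of what "normalize first" means in code. It scales a vector to unit length (L2 normalization); once both vectors are unit length, a plain dot product gives the same value as cosine similarity. `VectorMath` and its method names are invented for this example.

```csharp
using System;

// Hypothetical helper for illustration only.
public static class VectorMath
{
    // Returns a unit-length copy of the vector (L2 normalization).
    // A zero vector has no direction, so it is returned unchanged.
    public static float[] Normalize(float[] v)
    {
        double sumSquares = 0;
        foreach (var x in v) sumSquares += (double)x * x;

        double norm = Math.Sqrt(sumSquares);
        if (norm == 0) return (float[])v.Clone();

        var result = new float[v.Length];
        for (int i = 0; i < v.Length; i++)
            result[i] = (float)(v[i] / norm);
        return result;
    }

    // Plain dot product; equals cosine similarity when both inputs are unit length.
    public static double Dot(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must share one dimension.");

        double dot = 0;
        for (int i = 0; i < a.Length; i++)
            dot += (double)a[i] * b[i];
        return dot;
    }
}
```

For example, the vectors (3, 4) and (4, 3) have a raw dot product of 24, which says little about how aligned they are. After `Normalize`, their dot product is about 0.96, which is their cosine similarity. Normalizing once at indexing time also means each later comparison is just a dot product.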

Why Embeddings Rock: Advantages

Still not convinced embeddings are amazing? Here’s why you should embrace them:

- Deep Semantic Insight: Move past shallow text matches; understand the meaning behind the words.

- More Accurate Searches: Users find exactly what they mean, not just exact keyword hits.

- Flexible Applications: Easily integrate embeddings into search engines, recommenders, chatbots, and more.

- Efficiency through Dimensionality Reduction: Process complex data rapidly by reducing its complexity.

- Improved User Experience: More relevant interactions lead to happier users (and happier devs!).

Why Embeddings Aren’t Perfect: Disadvantages

Nothing’s perfect, right? Here are some embedding pitfalls:

- Computational Expense: Heavy computing required, especially with big data.

- Model Dependence: Quality heavily depends on embedding model suitability.

- Interpretability Issues: Embeddings can seem mysterious—understanding their exact reasoning can be tough.

- Nuances Missed: Sometimes subtle context or domain-specific meanings get overlooked.

- High Storage Demands: Storing numerous large embedding vectors demands significant resources.

Embeddings are powerful but not magic wands—keep expectations realistic!
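
To put a number on the storage point, here is a back-of-the-envelope sketch, assuming each dimension is stored as a 4-byte float and ignoring index structures, metadata, and replication (all of which add more on top). `EmbeddingStorageEstimator` is a made-up name for this example.

```csharp
// Hypothetical helper: estimates raw vector storage only.
public static class EmbeddingStorageEstimator
{
    // Raw size in bytes: one 4-byte float per dimension per vector.
    public static long RawBytes(long vectorCount, int dimensions) =>
        vectorCount * dimensions * sizeof(float);

    // Converts bytes to decimal gigabytes (1 GB = 1,000,000,000 bytes).
    public static double ToGigabytes(long bytes) => bytes / 1e9;
}
```

One million 1536-dimensional vectors come to 1,000,000 × 1536 × 4 = 6,144,000,000 bytes, or roughly 6.1 GB before any indexing overhead. Running this arithmetic early helps when choosing between models with different vector sizes.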

Anti-Patterns (Worth Repeating!)

Let’s repeat one last warning—because these are easy to trip over:

- Blind Trust in Embeddings: Always combine embeddings with business logic.

- Unvalidated Results: Just because embeddings say something is similar doesn’t always mean it is. Always validate!

- Skipping Model Optimization: Always experiment with models and settings before settling.

- Underestimating Costs: Be mindful of embedding storage and computational requirements early on.

Avoid these, and you’re already ahead of most teams!
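
One cheap way to keep compute costs in check is to avoid embedding the same text twice. Below is a minimal sketch of a caching decorator, assuming the same `IEmbeddingService` shape used earlier in this article (repeated here so the snippet compiles by itself). `CachingEmbeddingService` and `CountingFakeEmbeddingService` are hypothetical names, and the fake exists only to show that the cache works.

```csharp
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Same shape as the article's interface, repeated so this snippet stands alone.
public interface IEmbeddingService
{
    Task<float[]> GenerateEmbeddingAsync(string text);
}

// Hypothetical decorator: remembers results so repeated text is embedded once.
public class CachingEmbeddingService : IEmbeddingService
{
    private readonly IEmbeddingService _inner;
    private readonly ConcurrentDictionary<string, Task<float[]>> _cache =
        new ConcurrentDictionary<string, Task<float[]>>();

    public CachingEmbeddingService(IEmbeddingService inner) => _inner = inner;

    // Caveats of this simple version: the cache grows without bound, a failed
    // call stays cached, and callers share the same array instance.
    public Task<float[]> GenerateEmbeddingAsync(string text) =>
        _cache.GetOrAdd(text, t => _inner.GenerateEmbeddingAsync(t));
}

// Demo-only fake that counts how often the "model" is actually called.
public class CountingFakeEmbeddingService : IEmbeddingService
{
    public int CallCount { get; private set; }

    public Task<float[]> GenerateEmbeddingAsync(string text)
    {
        CallCount++; // not thread-safe; fine for a single-threaded demo
        return Task.FromResult(new float[] { text.Length, 1f });
    }
}
```

Wrap any real implementation with `new CachingEmbeddingService(realService)` and the rest of the app keeps talking to `IEmbeddingService` unchanged. For production use you would likely want eviction and a persistent store rather than an in-process dictionary.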

Conclusion: Master the Embeddings Pattern, Unleash the GenAI Power!

You made it! Embeddings aren’t just abstract tech—they’re your key to building smarter, more intuitive apps. Whether you’re powering semantic search, smart recommendations, or intelligent chatbots, embedding patterns enable software architects (just like you!) to create deeply insightful solutions.

Remember, the best GenAI apps understand the meaning—not just the words. And now you have the knowledge, the tools, and C# examples to harness embeddings like a pro.

So what are you waiting for? Time to embed smarter, not harder! Dive in, experiment fearlessly, and unlock a new world of intelligent GenAI solutions.